Imagine your users are trying to use your app, but delays and crashes are making the experience unbearable. You’ve already tuned the code to run faster, yet the issues persist. What would you do next?

For any application, especially one designed for multiple users, poor performance isn’t just an inconvenience — it’s a potential business killer.

At Builder.ai, we encountered this very challenge with our Builder Tracker application.

Builder Tracker, a crucial tool for our internal teams, was struggling to handle increasing usage, especially at peak times. As our business scaled, the application fell further behind, causing unacceptable delays that hurt productivity and efficiency.

To tackle this issue, we turned to load testing. Load testing helped us simulate high-traffic scenarios so that we could pinpoint performance bottlenecks and implement targeted, incremental improvements.

In this blog post, we’ll walk you through our load testing journey and share how we dramatically improved Builder Tracker’s performance.

Let’s dive in 👇

What is Builder Tracker?

Builder Tracker is our internal tool designed to streamline the software development process. It is used by our Project Managers to assign tasks, track progress and communicate efficiently with experts — teams of designers, developers and QA specialists. The experts, in turn, use Builder Tracker to understand task requirements and execute them.

Think of it as a centralised tool that ensures smooth execution from start to finish. It allows us to deliver high-quality software that’s aligned with customer expectations.

Challenges faced by Builder Tracker

With thousands of Buildcards live on Builder Tracker, ensuring flawless performance is absolutely critical. Any downtime or glitches could significantly disrupt our operations and pose a serious risk to our business.

Here are some of the specific challenges Builder Tracker encountered 👇

1 - Slow response times

Users were experiencing significant delays and sluggish responses, particularly during high-traffic periods. This was affecting productivity and causing frustration within teams.

For instance, simple tasks like creating or updating user stories were taking much longer than expected, leading to a poor user experience.

2 - Site downtime and unavailability

During peak usage times, Builder Tracker would sometimes go down or become unresponsive. This was especially problematic when teams from different regions, such as the UK, Dubai and India, were accessing the application simultaneously.

Users reported that the site was slow or not working, which disrupted their workflow and caused dissatisfaction.

3 - Improper resource allocation

As our business scaled, the number of users and the load on the Tracker application increased significantly. This made the performance issues worse, because the system hadn't been allocated enough resources to handle such high volumes of traffic efficiently.

The growing user base put immense pressure on the application, leading to frequent performance bottlenecks.

Why was load testing the right approach for us?

When faced with such challenges, the natural inclination might be to measure and tune the response time for each part of the software.

However, while performance testing measures how well software performs under its expected workload, we needed an approach that went one step further and showed us how the application behaves under increasing stress.

This is where load testing helped us to:

- Simulate real-world conditions - by replicating high-traffic scenarios, we could identify bottlenecks that only emerge during heavy usage.

- Enable targeted optimisations - it pinpoints when and where slowdowns occur, helping us implement precise fixes.

- Scale for the future - as our business scales, load testing helps us prepare systems for future growth and avoid crashes.

How we conducted load testing for Builder Tracker

Now that you understand the challenges and why we chose load testing, here’s a step-by-step process on how we conducted it 👇

1 - Define the goal

Our primary goal was to make sure that Builder Tracker could handle a high number of concurrent users without performance degradation.

Specifically, we aimed for the system to support at least 500 concurrent users, which was more than the number of users we expected to be online at any one time.
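One way to keep a goal like this honest is to write it down as pass/fail criteria that every test run is checked against. The sketch below is illustrative only: the 500-user target comes from the goal above, but the 1% failure ceiling and the names `LoadTestGoal`, `LoadTestResult` and `meets_goal` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LoadTestGoal:
    """Pass/fail criteria for one load test run."""
    min_concurrent_users: int = 500   # target stated in the goal
    max_failure_rate: float = 0.01    # hypothetical: at most 1% failed calls


@dataclass(frozen=True)
class LoadTestResult:
    concurrent_users: int
    total_calls: int
    failed_calls: int


def meets_goal(result: LoadTestResult, goal: LoadTestGoal) -> bool:
    """True if the run reached the target load with an acceptable failure rate."""
    if result.total_calls == 0:
        return False  # no traffic means nothing was actually tested
    failure_rate = result.failed_calls / result.total_calls
    return (result.concurrent_users >= goal.min_concurrent_users
            and failure_rate <= goal.max_failure_rate)


goal = LoadTestGoal()
# Hypothetical run: 600 users, 20 failures out of 5,000 calls (0.4%)
print(meets_goal(LoadTestResult(600, 5_000, 20), goal))    # True
# A 350-user run falls short of the 500-user target regardless of failures
print(meets_goal(LoadTestResult(350, 10_000, 140), goal))  # False
```

Encoding the target this way means a run that looks healthy but never reached 500 users cannot be mistaken for a pass.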

2 - Preparing the test environment

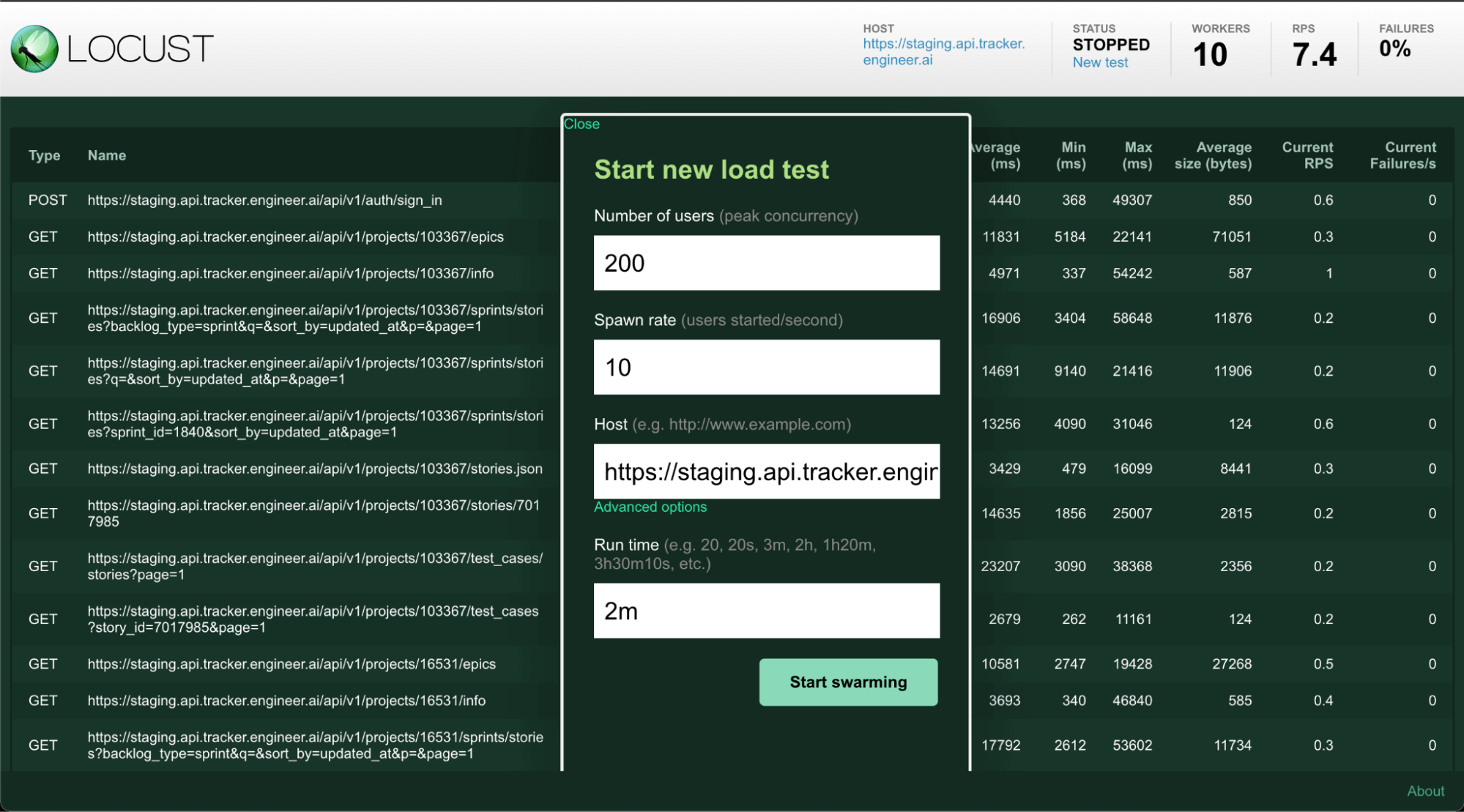

To simulate real-world scenarios, we chose Locust as our load testing tool, which allowed us to model user behaviour and measure performance under stress.

All testing was conducted in a staging environment to prevent disruptions to the live system.

We also built a dataset that mirrored real-world usage patterns, so that our tests were both rigorous and relevant.
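In Locust, simulated users are Python classes whose `@task(weight)` methods are picked at random in proportion to their weights, with a `wait_time` such as `between(1, 5)` pausing between actions. The stdlib-only sketch below mimics that idea so it runs without Locust installed. The action names, weights and think-time range are hypothetical, not Builder Tracker's real traffic mix.

```python
import random

# Hypothetical traffic mix: reads dominate, writes are rarer.
TASK_WEIGHTS = {
    "view_user_story": 6,
    "update_user_story": 3,
    "create_user_story": 1,
}
THINK_TIME_S = (1.0, 5.0)  # hypothetical pause between actions, in seconds


def simulate_session(n_actions: int, seed: int = 0) -> list[tuple[str, float]]:
    """Return (action, think_time) pairs for one simulated user.

    Actions are drawn in proportion to TASK_WEIGHTS, similar to how Locust
    picks among @task-decorated methods; think time is uniform in THINK_TIME_S.
    """
    rng = random.Random(seed)  # seeded so the generated dataset is reproducible
    names = list(TASK_WEIGHTS)
    weights = list(TASK_WEIGHTS.values())
    session = []
    for _ in range(n_actions):
        action = rng.choices(names, weights=weights, k=1)[0]
        session.append((action, rng.uniform(*THINK_TIME_S)))
    return session


session = simulate_session(1_000, seed=42)
counts = {name: sum(1 for a, _ in session if a == name) for name in TASK_WEIGHTS}
print(counts)  # roughly a 60/30/10 split across the three actions
```

Seeding the generator matters for load testing: rerunning a test after a configuration change should replay the same mix of actions, so that any difference in results comes from the change rather than the data.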

3 - Initial dry run

A dry run is a preliminary test that shows how an app performs under basic conditions. In essence, it establishes a baseline.

In our initial dry run, we performed 2 load tests with 350 users to understand how well the Tracker application could handle stress.

- In the first load test, we set up a small, light environment with a minimum of 4 pods, autoscaling up to 20 pods with 10 threads/pod. The results revealed that out of 10,000 calls, 7,400 weren't served, a 74% failure rate. This showed that Tracker struggled under this load and required significant reconfiguration.

- For the second load test, we bumped the configuration to a minimum of 8 pods, autoscaling up to 25 pods with 16 threads/pod. This time, out of 10,000 calls, only 140 calls weren't served, bringing the failure rate to just 1.4%. The significant reduction in failures indicated that the application could handle the load better with this configuration.
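The failure rates quoted for the two dry runs are simply unserved calls divided by total calls. A minimal helper (the name `failure_rate` is ours) reproduces both figures:

```python
def failure_rate(total_calls: int, unserved_calls: int) -> float:
    """Share of calls that were not served, as a percentage."""
    if total_calls <= 0:
        raise ValueError("total_calls must be positive")
    return 100.0 * unserved_calls / total_calls


# Figures reported for the two 350-user dry runs
print(failure_rate(10_000, 7_400))  # 74.0  (4-20 pods, 10 threads/pod)
print(failure_rate(10_000, 140))    # 1.4   (8-25 pods, 16 threads/pod)
```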

4 - Incremental load testing

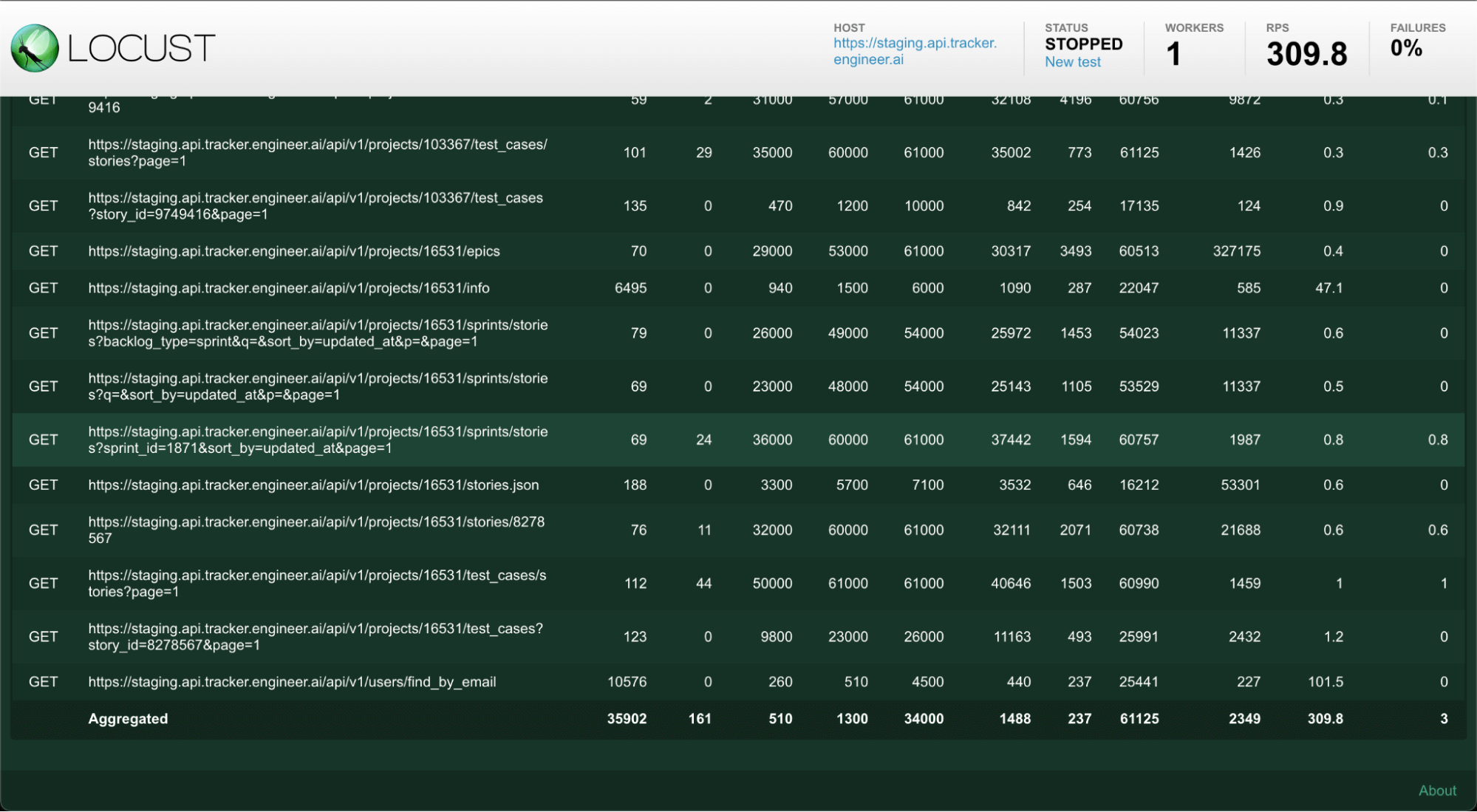

With a baseline established, we adopted an iterative approach: we ran a series of tests, adding roughly 100 users each time, and gradually worked our way up to 650 users.
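Locust can automate a stepped ramp like this through a custom `LoadTestShape` subclass, whose `tick()` method returns a `(user_count, spawn_rate)` tuple, or `None` to end the test. The pure function below sketches the same scheduling logic without depending on Locust. The stage durations and spawn rate are hypothetical, and the user counts assume steps of 100 from the 350-user baseline.

```python
from typing import Optional

# (end_time_s, user_count): hold each step until end_time_s.
# Hypothetical 10-minute steps; user counts assume +100 per step from 350.
STAGES = [(600, 350), (1200, 450), (1800, 550), (2400, 650)]
SPAWN_RATE = 10  # hypothetical users started per second when stepping up


def tick(run_time_s: float) -> Optional[tuple[int, int]]:
    """Return (target_users, spawn_rate) for the elapsed time, or None to stop.

    Follows the same contract as Locust's LoadTestShape.tick().
    """
    for end_time_s, users in STAGES:
        if run_time_s < end_time_s:
            return users, SPAWN_RATE
    return None  # all stages finished


print(tick(0))     # (350, 10)
print(tick(1500))  # (550, 10)
print(tick(2400))  # None
```

Holding each step for a fixed period, rather than ramping continuously, gives autoscaling time to settle so that each step's results reflect a stable configuration.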

For each test, we adjusted system configurations, including pod counts and threads per pod, to optimise performance.

During the final test, the team configured the system with a minimum of 5 pods, autoscaling up to 30 pods with 16 threads/pod, simulating 650 users.

The result was remarkable: a 0% failure rate. Builder Tracker can now handle 650 concurrent users without any issues.
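As a rough sanity check, each configuration above can be turned into worker-thread capacity by multiplying pods by threads per pod. This sketch assumes each thread serves one request at a time and that the autoscaler actually reaches its maximum; it says nothing about CPU, memory or database limits.

```python
# Worker-thread capacity implied by each configuration described above.
CONFIGS = {
    "dry run 1": (4, 20, 10),    # (min pods, max pods, threads per pod)
    "dry run 2": (8, 25, 16),
    "final test": (5, 30, 16),
}


def thread_capacity(min_pods: int, max_pods: int, threads_per_pod: int) -> tuple[int, int]:
    """Request-handling threads available at minimum and at full scale-out."""
    return min_pods * threads_per_pod, max_pods * threads_per_pod


for name, cfg in CONFIGS.items():
    low, high = thread_capacity(*cfg)
    print(f"{name}: {low} threads at minimum, {high} at full scale-out")
# dry run 1: 40 threads at minimum, 200 at full scale-out
# dry run 2: 128 threads at minimum, 400 at full scale-out
# final test: 80 threads at minimum, 480 at full scale-out
```

At full scale-out, capacity grew from 200 threads in the first dry run to 480 in the final test, a 2.4x increase. That 480 threads could serve 650 simulated users is plausible because users pause between actions, so only a fraction of them have a request in flight at any moment.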

Best practices to implement load testing

Here are some of the key takeaways we learned from implementing load testing.

1 - Optimal resource utilisation

It’s tempting to overengineer, for example by allocating resources for 5,000 users when usage patterns only call for 1,000.

To avoid this, balance resource allocations such as CPU and memory limits against actual needs, and tune them to handle demand efficiently without overprovisioning.

By optimising resource utilisation, systems can handle high loads effectively and minimise unnecessary costs.
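A simple way to size from measured demand rather than guesswork is Little's law: the average number of requests in flight equals the arrival rate multiplied by the time each request spends in the system. The sketch below applies it under two simplifying assumptions of ours: each in-flight request occupies one worker thread, and a fixed headroom margin covers bursts. The function name, defaults and example measurements are hypothetical.

```python
import math


def pods_needed(peak_rps: float, avg_response_s: float, threads_per_pod: int,
                headroom: float = 0.3, min_pods: int = 1, max_pods: int = 100) -> int:
    """Estimate a pod count from measured peak demand.

    Little's law: requests in flight = arrival rate (req/s) x time in system (s).
    Assumes one worker thread per in-flight request, plus `headroom` margin,
    clamped to the autoscaler's [min_pods, max_pods] range.
    """
    in_flight = peak_rps * avg_response_s
    threads = in_flight * (1 + headroom)
    pods = math.ceil(threads / threads_per_pod)
    return max(min_pods, min(pods, max_pods))


# Hypothetical measurements: 400 requests/s at a 0.5 s average response time
print(pods_needed(400, 0.5, threads_per_pod=16))  # 17
```

The point is the direction of reasoning: start from numbers observed in load tests and production monitoring, then add explicit headroom, instead of provisioning for a user count nobody has measured.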

2 - Create a staging environment

When running load tests, always use a staging environment that’s separate from your production setup. This gives you the freedom to experiment and fine-tune performance without disrupting real users or risking downtime in live systems.

Always make sure your staging environment is as close as possible to the live system. That means matching the same hardware, software and network configurations your production environment uses. By doing this, you’ll get results that accurately reflect how your application will behave under load.
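Parity between staging and production is easier to maintain if drift is checked mechanically rather than by eye. The sketch below compares two flat dictionaries of settings and reports every mismatch; the setting names and values are hypothetical, and in practice they might be extracted from deployment manifests.

```python
def config_drift(production: dict, staging: dict) -> dict:
    """Return {key: (production_value, staging_value)} for every mismatch.

    Keys missing on one side are reported with None for that side.
    """
    drift = {}
    for key in sorted(production.keys() | staging.keys()):
        prod_value, staging_value = production.get(key), staging.get(key)
        if prod_value != staging_value:
            drift[key] = (prod_value, staging_value)
    return drift


# Hypothetical settings for illustration only
production = {"cpu_limit": "2", "memory_limit": "4Gi", "threads_per_pod": 16, "db_size": "large"}
staging = {"cpu_limit": "1", "memory_limit": "4Gi", "threads_per_pod": 16}
print(config_drift(production, staging))
# {'cpu_limit': ('2', '1'), 'db_size': ('large', None)}
```

An empty result is a precondition worth checking before each test round, because a staging CPU limit half of production's would make any capacity figure misleading.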

3 - Incremental testing

It’s essential to start with a baseline load and increase it incrementally until the system starts showing signs of degradation. This helps you understand the system's capacity and make targeted improvements.

For example, we began with 100 users and gradually progressed to 200, 300, 400, 500 and 650 users.

This method allows for a more controlled and systematic approach to identifying performance bottlenecks.
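The incremental approach can be expressed as a loop that stops at the first step where the system degrades. In the sketch below, `run_step` is a hypothetical hook standing in for whatever actually runs one test (for example, a headless Locust run) and returns its failure rate; the 1% threshold is also an assumption.

```python
from typing import Callable, Optional, Sequence

STEPS = (100, 200, 300, 400, 500, 650)  # user counts from the example above


def find_breaking_point(run_step: Callable[[int], float],
                        steps: Sequence[int] = STEPS,
                        max_failure_rate: float = 0.01) -> Optional[int]:
    """Run each step in order; return the first user count that degrades.

    `run_step(users)` must return a failure rate between 0.0 and 1.0.
    Returns None if every step stays within `max_failure_rate`.
    """
    for users in steps:
        rate = run_step(users)
        print(f"{users} users -> {rate:.1%} failed")
        if rate > max_failure_rate:
            return users  # stop and investigate before adding more load
    return None


# Stand-in for a real test run: a made-up system that degrades above 450 users.
def fake_run(users: int) -> float:
    return 0.0 if users <= 450 else 0.05


print(find_breaking_point(fake_run))  # 500
```

Stopping at the first degraded step, rather than running the whole ladder, keeps each round focused: fix the bottleneck found at that load, then rerun from the start.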

4 - Continuous improvement

Load testing isn't simply a one-time activity but an ongoing process. As loads and system requirements evolve, continuous monitoring and optimisation are essential to maintaining performance.

To do this, you can use monitoring tools to track KPIs, such as response times, error rates, resource utilisation and user satisfaction scores. For example, if the error rate spikes at a certain load, find the cause and make the necessary adjustments.

This helps make sure that your application can handle increasing user loads over time, and lets you address performance issues proactively before they impact users.
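As an illustration of tracking two of the KPIs mentioned above, the sketch below summarises request samples into a 95th-percentile latency (nearest-rank method) and an error rate, then flags any that breach a threshold. The sample data, the 800 ms and 1% thresholds, and all function names are hypothetical; real monitoring tools compute these for you, but the logic is the same.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile (0 < pct <= 100) of a non-empty list."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def kpis(samples: list[tuple[float, bool]]) -> dict:
    """Summarise (latency_ms, succeeded) samples into basic KPIs."""
    latencies = [ms for ms, _ in samples]
    errors = sum(1 for _, ok in samples if not ok)
    return {"p95_ms": percentile(latencies, 95), "error_rate": errors / len(samples)}


def alerts(summary: dict, max_p95_ms: float = 800, max_error_rate: float = 0.01) -> list[str]:
    """Names of KPIs that breach their (hypothetical) thresholds."""
    breached = []
    if summary["p95_ms"] > max_p95_ms:
        breached.append("p95_ms")
    if summary["error_rate"] > max_error_rate:
        breached.append("error_rate")
    return breached


# Hypothetical window: 95 fast successes, 5 slow failures
samples = [(120.0, True)] * 95 + [(950.0, False)] * 5
summary = kpis(samples)
print(summary)          # {'p95_ms': 120.0, 'error_rate': 0.05}
print(alerts(summary))  # ['error_rate']
```

Note that the p95 latency looks healthy here while the error rate does not, which is why tracking several KPIs together matters: any single metric can hide a problem.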

Conclusion

Load testing has been a game-changer for Builder Tracker. It helped us tune the infrastructure to provide a fast and reliable application that could handle our growing number of users.

As we continue to keep an eye on its performance and make targeted optimisations, we're confident that Builder Tracker will keep evolving to meet our business needs and support seamless productivity as we grow.

If you’re interested in learning about how Builder.ai keeps software running at its peak, get in touch with us.

Lalit Kumar Maurya is a Senior Technical Lead at Builder.ai with over 12 years of experience in software development and leadership. He excels in architecting scalable solutions, managing large technical teams and developing full-stack web applications. Lalit's expertise includes Ruby on Rails, Angular, Docker, Kubernetes and databases, with a strong emphasis on robust architecture and scalability. His technical proficiency and leadership make him a key contributor to delivering complex projects successfully.
